Acoustic Human Activity Recognition Tutorial

!pip install pysensing

In this tutorial, we implement code for acoustic human activity recognition (HAR).

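import torch

from torch import nn  # explicit import for nn.CrossEntropyLoss used below

from torch.utils.data import DataLoader  # explicit import for the manual dataloaders below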

torch.backends.cudnn.benchmark = True

import matplotlib.pyplot as plt

import numpy as np

import pysensing.acoustic.datasets.har as har_datasets

import pysensing.acoustic.models.har as har_models

import pysensing.acoustic.models.get_model as acoustic_models

import pysensing.acoustic.inference.embedding as embedding

seed = 42

torch.manual_seed(seed)

torch.cuda.manual_seed(seed)

torch.cuda.manual_seed_all(seed)

np.random.seed(seed)

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
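The seeding calls above can be gathered into one helper. The sketch below is an illustration, not part of pysensing: the name `seed_everything` is hypothetical, and NumPy and PyTorch are seeded only if they are installed. Note that with `torch.backends.cudnn.benchmark = True`, cuDNN may pick different convolution algorithms between runs, so GPU results may still differ slightly even with fixed seeds.

```python
import importlib.util
import random


def seed_everything(seed: int) -> None:
    """Seed every random number generator available in this environment.

    Hypothetical helper for illustration; it is not a pysensing function.
    """
    random.seed(seed)  # Python's built-in generator
    if importlib.util.find_spec("numpy") is not None:
        import numpy as np
        np.random.seed(seed)
    if importlib.util.find_spec("torch") is not None:
        import torch
        torch.manual_seed(seed)
        # Silently ignored when CUDA is not available.
        torch.cuda.manual_seed_all(seed)


# Seeding twice with the same value reproduces the same random draws.
seed_everything(42)
first = [random.random() for _ in range(3)]
seed_everything(42)
second = [random.random() for _ in range(3)]
print(first == second)  # True
```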

SAMoSA: Sensing Activities with Motion and Subsampled Audio

The SAMoSA dataset uses audio and IMU data collected by a watch to predict the user's actions.

The dataset contains 27 actions in total.

The library provides a dataloader that uses only the audio data to predict these actions.

Load the data

# Method 1: Use get_dataloader

from pysensing.acoustic.datasets.get_dataloader import *

train_loader,test_loader = load_har_dataset(

root='./data',

dataset='samosa',

download=True)

# Method 2: Manually set up the dataloader

root = './data' # The path that contains the SAMoSA dataset

samosa_traindataset = har_datasets.SAMoSA(root,'train')

samosa_testdataset = har_datasets.SAMoSA(root,'test')

# Define the dataloader

samosa_trainloader = DataLoader(samosa_traindataset,batch_size=64,shuffle=True,drop_last=True)

samosa_testloader = DataLoader(samosa_testdataset,batch_size=64,shuffle=True,drop_last=True)

dataclass = samosa_traindataset.class_dict

datalist = samosa_traindataset.audio_data

# Example of the samples in the dataset

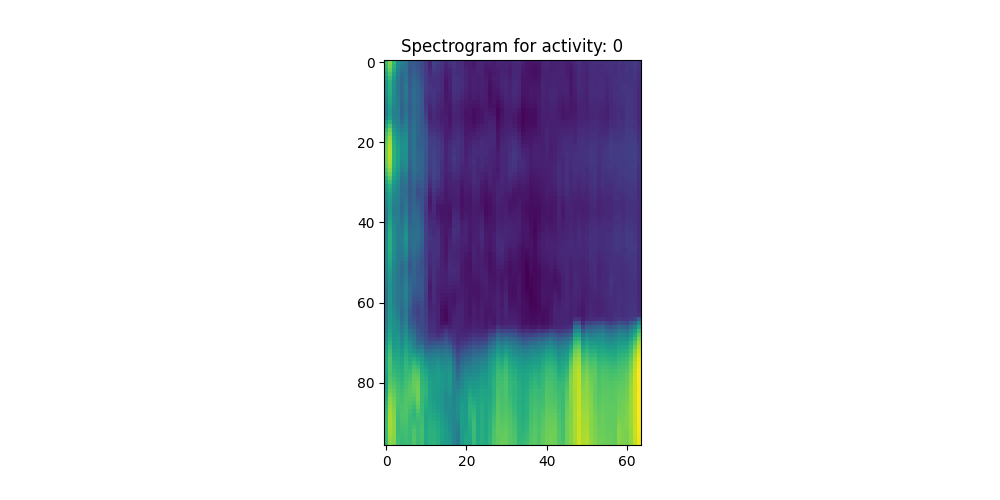

index = 50 # An arbitrary sample index

spectrogram,activity= samosa_traindataset[index]

plt.figure(figsize=(10,5))

plt.imshow(spectrogram.numpy()[0])

plt.title("Spectrogram for activity: {}".format(activity))

plt.show()

using dataset: SAMoSA
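The manual loaders above use `batch_size=64` with `drop_last=True`, so any final partial batch is discarded. The sketch below shows how the batch count follows from the dataset size. The size 5000 is a made-up example, not the real size of SAMoSA, and both helper names are hypothetical.

```python
def num_batches(num_samples: int, batch_size: int, drop_last: bool) -> int:
    """Number of batches a loader yields per epoch."""
    full, remainder = divmod(num_samples, batch_size)
    if drop_last or remainder == 0:
        return full
    return full + 1  # the last, smaller batch is kept


def sample_range(batches: int, batch_size: int) -> tuple:
    """Smallest and largest dataset sizes giving `batches` batches with drop_last=True."""
    return batches * batch_size, (batches + 1) * batch_size - 1


# Hypothetical dataset of 5000 samples.
print(num_batches(5000, 64, drop_last=True))   # 78
print(num_batches(5000, 64, drop_last=False))  # 79

# Inverse view: 78 batches of 64 with drop_last=True.
print(sample_range(78, 64))  # (4992, 5055)
```

Assuming the training progress bar in this tutorial counts loader batches, its total of 78 would put the SAMoSA training split between 4992 and 5055 samples.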

Load the model

# Method 1: Instantiate the model class directly

samosa_model = har_models.HAR_SAMCNN(dropout=0.6).to(device)

# Method 2: Load the model by name with pretrained weights

samosa_model = acoustic_models.load_har_model('samcnn',pretrained=True).to(device)

Model training and testing

from pysensing.acoustic.inference.training.har_train import *

# Model training

epoch = 1

criterion = nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(samosa_model.parameters(), lr=0.0001)

har_train_val(samosa_model,samosa_trainloader,samosa_testloader, epoch, optimizer, criterion, device, save_dir = './data',save = True)

# Model testing

test_loss = har_test(samosa_model,samosa_testloader,criterion,device)

Train round0/1: 99%|█████████▊| 77/78 [00:05<00:00, 13.03batch/s]

Epoch:1, Accuracy:0.7600,Loss:0.735948420

Test round: 100%|██████████| 30/30 [00:01<00:00, 15.47batch/s]

test accuracy:0.6594, loss:1.07104

Save model at ./data/train_0.pth...

Test round: 73%|███████▎ | 22/30 [00:00<00:00, 106.34batch/s]

test accuracy:0.6599, loss:1.07029
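To put the reported loss values in context, the sketch below computes cross-entropy for a single sample in plain Python. It mirrors what `nn.CrossEntropyLoss` does per sample (log-softmax followed by negative log-likelihood) but is not the library implementation, and the logits are made up. A model that spreads probability uniformly over the 27 SAMoSA classes would score ln(27).

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy of one sample: -log(softmax(logits)[target])."""
    peak = max(logits)  # subtract the maximum for numerical stability
    log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_sum_exp - logits[target]


num_classes = 27  # number of SAMoSA actions stated above

# Uniform logits give every class probability 1/27.
chance_loss = cross_entropy([0.0] * num_classes, target=0)
print(round(chance_loss, 4))  # 3.2958, i.e. ln(27)

# Made-up logits that favour the correct class give a much smaller loss.
confident = [0.0] * num_classes
confident[18] = 5.0
print(cross_entropy(confident, target=18) < chance_loss)  # True
```

The test loss of about 1.07 reported above is well below this uniform-guess value of roughly 3.30.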

Model inference for a single sample

# Method 1

# You may also load your own trained model by setting the path

# samosa_model.load_state_dict(torch.load('path_to_model')) # the path for the model

spectrogram,activity= samosa_testdataset[3]

samosa_model.eval()

# Use the model directly for single-sample inference

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

predicted_result = samosa_model(spectrogram.unsqueeze(0).float().to(device))

#print("The ground truth is {}, while the predicted activity is {}".format(activity,torch.argmax(predicted_result).cpu()))

# Method 2

# Use inference.predict

from pysensing.acoustic.inference.predict import *

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

predicted_result = har_predict(spectrogram,'SAMoSA',samosa_model, device)

print("The ground truth is {}, while the predicted activity is {}".format(activity,torch.argmax(predicted_result).cpu()))

The ground truth is 18, while the predicted activity is 18
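The printed result is a class index. The sketch below shows one way to turn raw model outputs into named, ranked predictions. It assumes the outputs are unnormalised logits and that a name-to-index mapping is available; the exact structure of `class_dict` is not shown in this tutorial, so the mapping, activity names, and logits here are hypothetical. With a real tensor, something like `predicted_result.squeeze().tolist()` would supply the list.

```python
import math


def softmax(logits):
    """Convert logits to probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits, index_to_name, k=3):
    """Return the k most probable (name, probability) pairs, best first."""
    probs = softmax(logits)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(index_to_name.get(i, str(i)), probs[i]) for i in ranked[:k]]


# Hypothetical label mapping and logits, for illustration only.
name_to_index = {"walking": 0, "typing": 1, "clapping": 2}
index_to_name = {idx: name for name, idx in name_to_index.items()}
logits = [0.5, 2.0, -1.0]

for name, prob in top_k(logits, index_to_name, k=2):
    print(f"{name}: {prob:.3f}")
```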

Model embedding for a single sample

from pysensing.acoustic.inference.embedding import *

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

sample_embedding = har_embedding(spectrogram,'SAMoSA',samosa_model, device=device)
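A common use of such embeddings is comparing two samples. The sketch below computes cosine similarity in plain Python. It assumes each embedding has been flattened into a list of floats (for a tensor, something like `sample_embedding.flatten().tolist()`), and the three vectors are made-up stand-ins rather than real model outputs.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Made-up embeddings, for illustration only.
emb_a = [1.0, 0.0, 2.0]
emb_b = [2.0, 0.0, 4.0]  # same direction as emb_a
emb_c = [0.0, 3.0, 0.0]  # orthogonal to emb_a

print(cosine_similarity(emb_a, emb_b))  # close to 1.0
print(cosine_similarity(emb_a, emb_c))  # 0.0
```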

Implementation of “AudioIMU: Enhancing Inertial Sensing-Based Activity Recognition with Acoustic Models”

This dataset uses audio and IMU data collected by a watch to predict the user's actions. It covers 23 different activities.

Unlike the original paper, this reimplementation uses only the audio data for human activity recognition. Subjects 01, 02, 03, and 04 are used for testing, while the others are used for training.
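The subject-wise split described above can be sketched as follows. This is only an illustration of the idea: the record layout, the file names, and the helper `split_by_subject` are hypothetical, and the library's `AudioIMU` dataset class may organise its data differently.

```python
TEST_SUBJECTS = {"01", "02", "03", "04"}  # held-out subjects named above


def split_by_subject(records, test_subjects=TEST_SUBJECTS):
    """Send each record to train or test according to its subject ID."""
    train, test = [], []
    for record in records:
        if record["subject"] in test_subjects:
            test.append(record)
        else:
            train.append(record)
    return train, test


# Hypothetical records, for illustration only.
records = [
    {"subject": "01", "clip": "a.wav"},
    {"subject": "05", "clip": "b.wav"},
    {"subject": "03", "clip": "c.wav"},
    {"subject": "07", "clip": "d.wav"},
]

train, test = split_by_subject(records)
print([r["subject"] for r in train])  # ['05', '07']
print([r["subject"] for r in test])   # ['01', '03']
```

Splitting by subject rather than by clip keeps every recording of a test subject out of training, so the test score reflects performance on people the model has not heard.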

Load the data

# Method 1: Use get_dataloader

from pysensing.acoustic.datasets.get_dataloader import *

train_loader,test_loader = load_har_dataset(

root='./data',

dataset='audioimu',

download=True)

# Method 2: Manually set up the dataloader

root = './data' # The path that contains the AudioIMU dataset

audioimu_traindataset = har_datasets.AudioIMU(root,'train')

audioimu_testdataset = har_datasets.AudioIMU(root,'test')

# Define the Dataloader

audioimu_trainloader = DataLoader(audioimu_traindataset,batch_size=64,shuffle=False,drop_last=True)

audioimu_testloader = DataLoader(audioimu_testdataset,batch_size=64,shuffle=False,drop_last=True)

# List the activity classes in the dataset

dataclass = audioimu_traindataset.classlist

# Example of the samples in the dataset

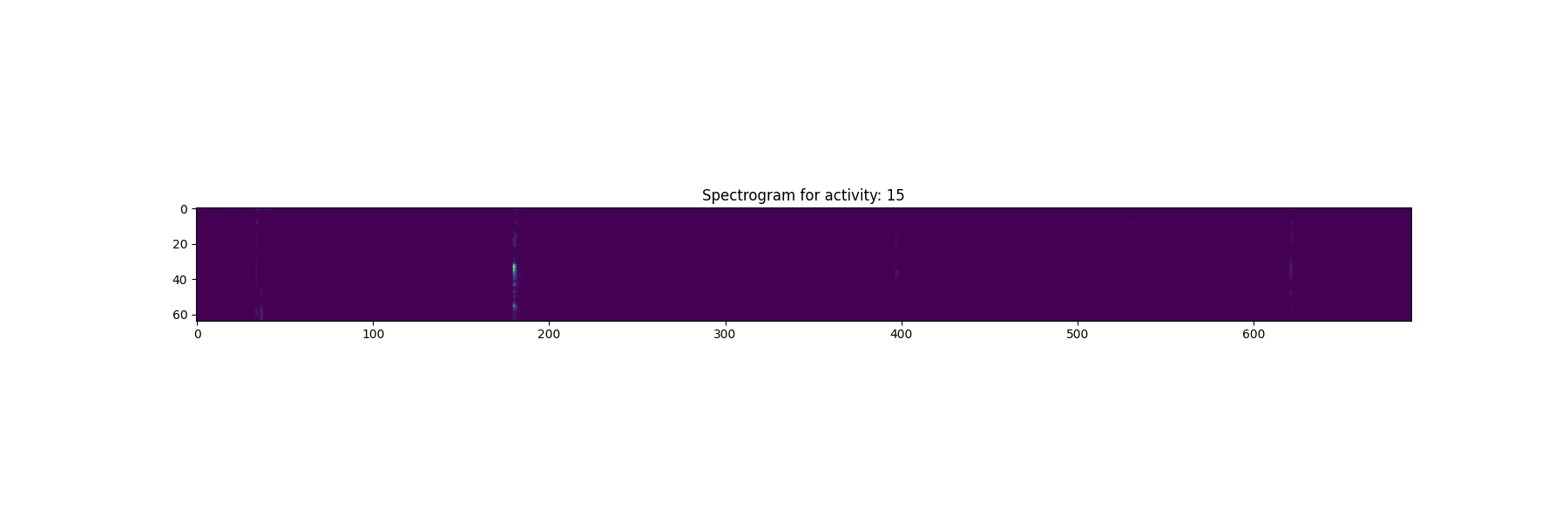

index = 0 # An arbitrary sample index

spectrogram,activity= audioimu_testdataset[index]

print(spectrogram.shape)

plt.figure(figsize=(18,6))

plt.imshow(spectrogram.numpy()[0])

plt.title("Spectrogram for activity: {}".format(activity))

plt.show()

using dataset: AudioImu

torch.Size([1, 64, 690])

Load the model

# Method 1: Instantiate the model class directly

audio_model = har_models.HAR_AUDIOCNN().to(device)

# Method 2: Load the model by name with pretrained weights

audio_model = acoustic_models.load_har_model('audiocnn',pretrained=True).to(device)

Model training and testing

from pysensing.acoustic.inference.training.har_train import *

epoch = 1

criterion = nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(audio_model.parameters(), lr=0.0001)

loss = har_train_val(audio_model,audioimu_trainloader,audioimu_testloader, epoch, optimizer, criterion, device, save_dir='./data',save = False)

# Model testing

test_loss = har_test(audio_model,audioimu_testloader,criterion,device)

Train round0/1: 100%|██████████| 8/8 [00:07<00:00, 1.39batch/s]

Epoch:1, Accuracy:0.9727,Loss:0.082635007

Test round: 100%|██████████| 15/15 [00:08<00:00, 1.82batch/s]

test accuracy:0.5115, loss:5.93836
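The run above reports a training accuracy of 0.9727 but a test accuracy of 0.5115. A single overall number does not show which activities account for the gap, so a per-class breakdown can help. The sketch below is a plain-Python illustration with made-up labels. It is not how `har_test` computes its metric, and both helper names are hypothetical.

```python
from collections import Counter


def accuracy(predictions, targets):
    """Fraction of samples whose predicted label equals the target."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if not targets:
        raise ValueError("at least one sample is required")
    correct = sum(p == t for p, t in zip(predictions, targets))
    return correct / len(targets)


def per_class_accuracy(predictions, targets):
    """Accuracy computed separately for each target class."""
    totals = Counter(targets)
    hits = Counter(t for p, t in zip(predictions, targets) if p == t)
    return {label: hits[label] / count for label, count in sorted(totals.items())}


# Made-up labels for six samples across three classes.
targets = [0, 0, 1, 1, 2, 2]
predictions = [0, 0, 1, 0, 0, 2]

print(round(accuracy(predictions, targets), 4))   # 0.6667
print(per_class_accuracy(predictions, targets))   # {0: 1.0, 1: 0.5, 2: 0.5}
```

For reference, uniform guessing over 23 balanced classes would give an accuracy of about 1/23, or roughly 0.043.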

Model inference

# Method 1

# You may also load your own trained model by setting the path

# audio_model.load_state_dict(torch.load('path_to_model')) # the path for the model

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

spectrogram,activity= audioimu_testdataset[6]

audio_model.eval()

predicted_result = audio_model(spectrogram.unsqueeze(0).float().to(device))

#print("The ground truth is {}, while the predicted activity is {}".format(activity,torch.argmax(predicted_result).cpu()))

# Method 2

# Use inference.predict

from pysensing.acoustic.inference.predict import *

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

predicted_result = har_predict(spectrogram,'AudioIMU',audio_model, device)

print("The ground truth is {}, while the predicted activity is {}".format(activity,torch.argmax(predicted_result).cpu()))

The ground truth is 21, while the predicted activity is 21

Model embedding

from pysensing.acoustic.inference.embedding import *

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

sample_embedding = har_embedding(spectrogram,'AudioIMU',audio_model, device=device)

And that’s it. We’re done with our acoustic human activity recognition tutorial. Thanks for reading.

Total running time of the script: (0 minutes 26.295 seconds)